A numerically exact low-order scaling method is supported for large-scale calculations [75].

The computational effort of the method scales as O(

![]() ), O(

), O(![]() ), and O(

), and O(![]() )

for one, two, and three dimensional systems, respectively, where

)

for one, two, and three dimensional systems, respectively, where ![]() is the number of basis functions.

Unlike O(

is the number of basis functions.

Unlike O(![]() ) methods developed so far the approach is a numerically exact alternative to conventional

O(

) methods developed so far the approach is a numerically exact alternative to conventional

O(![]() ) diagonalization schemes in spite of the low-order scaling, and can be applicable to

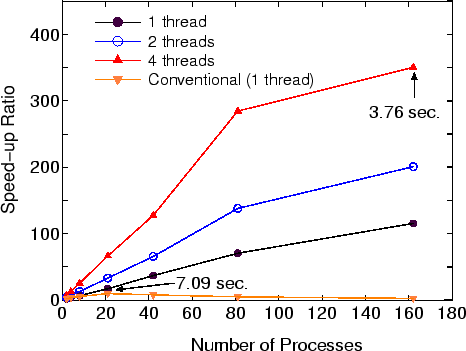

not only insulating but also metallic systems in a single framework. The well separated data

structure is suitable for the massively parallel computation as shown in Fig. 35.

However, the advantage of the method can be obtained

only when a large number of cores are used for parallelization, since the prefactor of computational

efforts can be large. When you calculate low-dimensional large-scale systems using a large number of

cores, the method can be a proper choice. To choose the method for diagonzalization, you can specify

the keyword 'scf.EigenvalueSolver' as

) diagonalization schemes in spite of the low-order scaling, and can be applicable to

not only insulating but also metallic systems in a single framework. The well separated data

structure is suitable for the massively parallel computation as shown in Fig. 35.

However, the advantage of the method can be obtained

only when a large number of cores are used for parallelization, since the prefactor of computational

efforts can be large. When you calculate low-dimensional large-scale systems using a large number of

cores, the method can be a proper choice. To choose the method for diagonzalization, you can specify

the keyword 'scf.EigenvalueSolver' as

scf.EigenvalueSolver cluster2

The method is supported only for collinear DFT calculations of cluster systems or periodic systems with

the

scf.Npoles.ON2 90

The number of poles needed to achieve convergence does not depend on the size of the system [75], but on the spectral radius of the system. If an electronic temperature of more than 300 K is used, 100 poles are enough to obtain sufficient convergence of the total energy and forces.
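For orientation, the keywords discussed above might appear together in an input file as in the following fragment. This is only a sketch and not the content of any distributed input file: the values are illustrative, and 'scf.ElectronicTemperature' (given in K) is included on the assumption that the electronic temperature is set explicitly rather than left at its default.

```
scf.EigenvalueSolver         cluster2    # numerically exact low-order scaling method
scf.Npoles.ON2               100         # number of poles (illustrative value)
scf.ElectronicTemperature    600.0       # electronic temperature in K (illustrative value)
```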

As an illustration, we show a calculation by the numerically exact low-order scaling method using the input file 'C60_LO.dat' stored in the directory 'work'.

% mpirun -np 8 openmx C60_LO.dat

As shown in Table 7, the total energy by the low-order scaling method is equivalent to that by the conventional method within double precision, while the computational time is much longer than that of the conventional method for such a small system. We expect the crossing point between the low-order scaling and the conventional methods with respect to computational time to be located at around 300 atoms when more than 100 cores are used for the parallel computation, although it depends on the dimensionality of the system.
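The crossover described above can be made concrete with a rough cost model. The following Python sketch assumes the scaling orders $O(N(\log_2 N)^2)$, $O(N^2)$, and $O(N^{7/3})$ for the low-order method in one, two, and three dimensions, and $O(N^3)$ for conventional diagonalization. The names `COST_MODELS`, `growth_factor`, and `crossover_size` are hypothetical helpers introduced here, not part of OpenMX, and any prefactor ratio passed to `crossover_size` is an assumption rather than a measured value.

```python
import math

# Asymptotic cost models only; prefactors are deliberately omitted here.
COST_MODELS = {
    "low-order 1D": lambda n: n * math.log2(n) ** 2,
    "low-order 2D": lambda n: n ** 2,
    "low-order 3D": lambda n: n ** (7.0 / 3.0),
    "conventional": lambda n: n ** 3,
}


def growth_factor(model, n, scale=2.0):
    """Return cost(scale * n) / cost(n) for the named cost model."""
    cost = COST_MODELS[model]
    return cost(scale * n) / cost(n)


def crossover_size(prefactor_ratio, exponent_low=7.0 / 3.0, exponent_high=3.0):
    """Return N where prefactor_ratio * N**exponent_low equals N**exponent_high.

    prefactor_ratio is the (assumed) ratio of the low-order prefactor to the
    conventional prefactor; it is a free parameter of this toy model.
    """
    return prefactor_ratio ** (1.0 / (exponent_high - exponent_low))


if __name__ == "__main__":
    n = 10_000  # illustrative number of basis functions
    for name in COST_MODELS:
        factor = growth_factor(name, n)
        print(f"{name:>13}: doubling N multiplies the cost by {factor:.2f}")
```

In this model, doubling $N$ multiplies the conventional cost by 8 but the three-dimensional low-order cost by only $2^{7/3}$, so a large prefactor is eventually overtaken. Where that happens in practice depends on the actual prefactors, the number of cores, and the dimensionality, none of which this sketch attempts to predict.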

|